Introduction

This report presents some of the most important distinguishing features between three types of cancer: Pheochromocytoma and Paraganglioma (PCPG), Pancreatic Cancer (PAAD) and Acute Myeloid Leukaemia (LAML). These cancers were chosen because their data is roughly balanced, with the number of samples ranging between 179 and 195. For each cancer type, three datasets were analysed: the DNA methylation450k profile (methylation dataset), since irregular methylation can inactivate tumour-suppressor genes and cause cancer; the exon expression profile (exon dataset), since skipping of exons in the encoded transcripts can contribute to cancer; and the gene expression profile (gene dataset), since normal and cancer cells have different expression profiles.

First, the datasets of the same type were merged across the three cancer types. The data was then standardised, and seven different classifiers were evaluated on the full datasets. The best features were then extracted by inspecting the most important nodes in decision trees and random forests and the largest weights in linear classifiers. A second approach, based on Recursive Feature Elimination (RFE), was also introduced. Classification was then repeated using only the best features. The classification results were compared with those of the full-dataset classification, and the extracted genes were compared with the existing literature. The Python packages matplotlib, seaborn, pandas, NumPy and scikit-learn were used.

Data Pre-Processing

The datasets contained missing values, so these were replaced with the mean of the feature; this simple form of imputation suits continuous data and linear models. Features with no values at all were removed. A class label was then added to each sample. Next, the methylation datasets of the three cancer types were stacked, keeping only the features common to all three. The same was done for the exon and gene datasets. Lastly, the data was standardised using scikit-learn's RobustScaler, which centres each feature on its median and scales it by its interquartile range. This scaler handles data with outliers better than zero-mean, unit-variance scaling, which was also observed when scoring the different classifiers.

The resulting datasets (samples × features) had shapes of 576 × 396,067 for the methylation dataset, 543 × 239,324 for the exon dataset and 543 × 20,532 for the gene dataset.
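The pre-processing steps above can be sketched with pandas and scikit-learn as follows. This is a minimal sketch, not the code used in this work: it assumes each per-cancer dataset is a pandas DataFrame with samples as rows and numeric features as columns, and the helper names `clean` and `merge_and_scale` as well as the toy data are hypothetical.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import RobustScaler


def clean(df: pd.DataFrame, label: str) -> pd.DataFrame:
    """Drop all-NaN features, mean-impute the rest and attach a class label."""
    df = df.dropna(axis=1, how="all")  # features with no values at all
    df = df.fillna(df.mean())  # remaining gaps -> mean of that feature
    return df.assign(label=label)


def merge_and_scale(frames: dict) -> tuple:
    """Stack one dataset type across cancers, keeping only shared features."""
    cleaned = [clean(df, name) for name, df in frames.items()]
    # join="inner" keeps only the columns present in every cancer's dataset
    stacked = pd.concat(cleaned, axis=0, join="inner")
    y = stacked.pop("label").to_numpy()
    # RobustScaler centres on the median and scales by the interquartile range
    X = RobustScaler().fit_transform(stacked.to_numpy(dtype=float))
    return X, y, list(stacked.columns)


# Toy example: three "cancers" with partly overlapping feature sets
rng = np.random.default_rng(0)
columns = {"PCPG": ["a", "b", "c"], "PAAD": ["b", "c", "d"], "LAML": ["c", "b", "e"]}
demo = {name: pd.DataFrame(rng.normal(size=(4, 3)), columns=cols)
        for name, cols in columns.items()}
demo["PCPG"].iloc[0, 1] = np.nan  # a missing value in feature "b"
X, y, features = merge_and_scale(demo)
print(X.shape, sorted(features))
```

Dropping the empty features before imputing matters: the mean of an all-missing column is itself undefined, so it could not fill anything.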

Full Dataset Classification

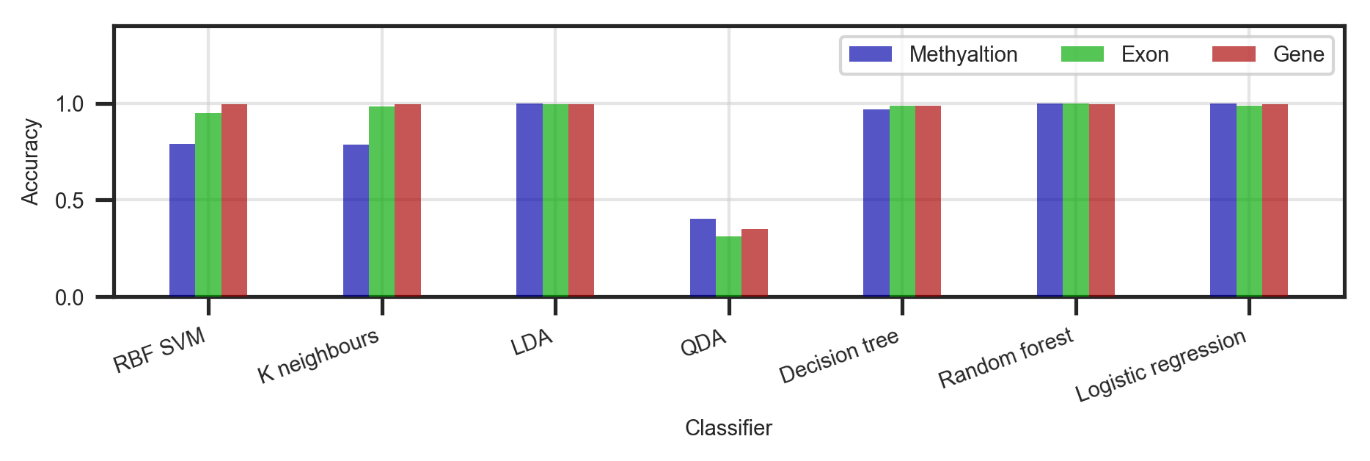

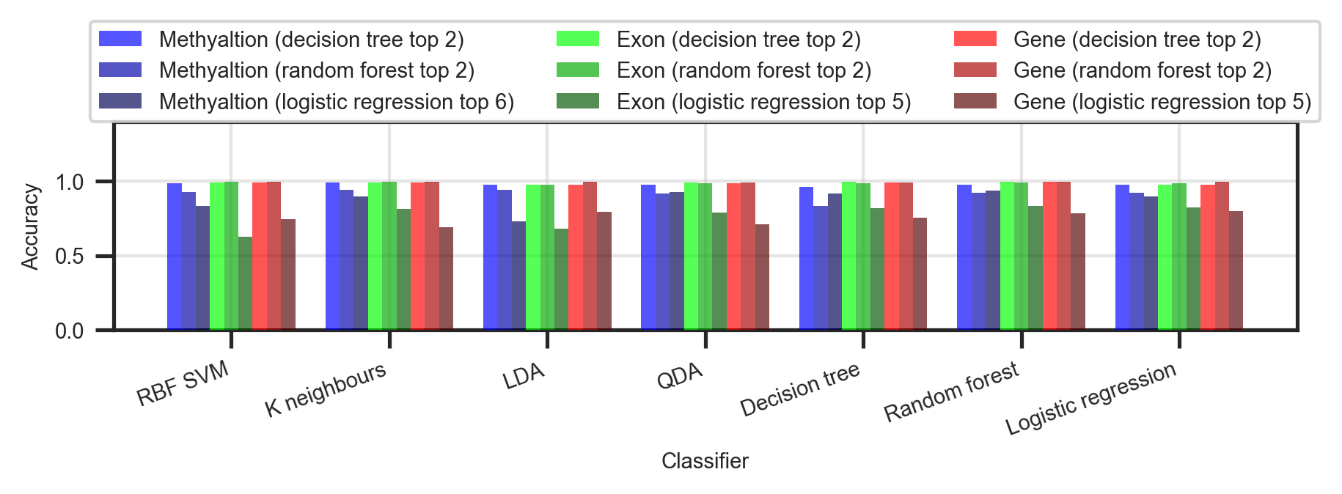

Initially, seven estimators (three linear and four non-linear) were trained on 67% of each of the three full datasets (Figure ). This was done to check whether classification is feasible at all, how the different classifiers perform and how the different datasets compare. The classifiers were then tested on the remaining 33%.

In general, all datasets show promising results. The gene dataset achieves the best accuracy across the board, closely followed by the exon and methylation datasets. Among the classifiers, LDA, decision tree, random forest and logistic regression give consistently strong results, with an average accuracy of 99.3%.
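A minimal sketch of this full-dataset evaluation, assuming a feature matrix `X` and label vector `y` such as those produced during pre-processing. The function name `score_classifiers`, the hyperparameters, the stratified split and the synthetic demo data are assumptions; only five of the seven estimators are named in this report, so the remaining two are left out rather than guessed.

```python
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.svm import LinearSVC
from sklearn.tree import DecisionTreeClassifier


def score_classifiers(X, y, seed=0):
    """Fit each classifier on 67% of the samples and return its test accuracy."""
    # Assumption: a stratified split keeps the class balance in both parts
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.33, stratify=y, random_state=seed)
    # Only these five of the seven estimators are named in the report
    models = {
        "LDA": LinearDiscriminantAnalysis(),
        "logistic regression": LogisticRegression(max_iter=1000),
        "linear SVM": LinearSVC(max_iter=10000),
        "decision tree": DecisionTreeClassifier(random_state=seed),
        "random forest": RandomForestClassifier(random_state=seed),
    }
    return {name: model.fit(X_train, y_train).score(X_test, y_test)
            for name, model in models.items()}


# Synthetic stand-in for a pre-processed dataset (three balanced classes)
X, y = make_classification(n_samples=300, n_features=50, n_informative=5,
                           n_classes=3, random_state=0)
scores = score_classifiers(X, y)
for name, accuracy in scores.items():
    print(f"{name}: {accuracy:.3f}")
```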

Best Feature Selection

The first approach to extracting the best features was to train LDA, logistic regression and linear SVM and take the features with the highest weights. In addition, decision trees and random forests were trained, and the features used in their top few nodes were taken. In general, the classifiers produced very different results. The figures below show the patterns that were observed.

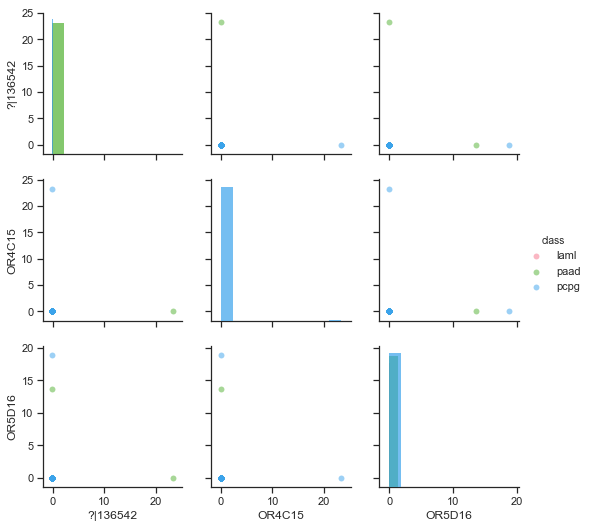

By far, LDA produced the worst results. LDA projects the data onto the most discriminative direction: it maximises the between-class scatter while minimising the within-class scatter. The datasets turned out to contain many features that are all zeros (rather than N/A), and these were kept after pre-processing. In addition, some features had only a few measured values, with the rest filled in with the feature mean. Such nearly single-valued features have almost no within-class scatter, so LDA ranks them first (Figure ). LDA was therefore not suitable for feature selection on these datasets.
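The behaviour described above follows from the Fisher criterion that LDA optimises. In the standard formulation below, c runs over the classes, N_c is the number of samples in class c, mu_c is the class mean and mu the overall mean; restricting the projection to a single feature j (w equal to the unit vector e_j) gives the per-feature ratio in the last line. If a feature is almost constant within each class, for example because most of its values were filled with one imputed mean, its within-class scatter (the denominator) is close to zero while the class means can still differ, so its ratio becomes very large and it dominates the ranking. This is offered as a plausible explanation of the observed ranking, not as a result derived in this report.

```latex
\begin{aligned}
J(\mathbf{w}) &= \frac{\mathbf{w}^\top S_B \mathbf{w}}{\mathbf{w}^\top S_W \mathbf{w}} \\
S_B &= \sum_{c} N_c \,(\boldsymbol{\mu}_c - \boldsymbol{\mu})(\boldsymbol{\mu}_c - \boldsymbol{\mu})^\top \\
S_W &= \sum_{c} \sum_{i \in c} (\mathbf{x}_i - \boldsymbol{\mu}_c)(\mathbf{x}_i - \boldsymbol{\mu}_c)^\top \\
J_j &= \frac{\sum_{c} N_c \,(\mu_{c,j} - \mu_j)^2}{\sum_{c} \sum_{i \in c} (x_{ij} - \mu_{c,j})^2}
\qquad (\mathbf{w} = \mathbf{e}_j)
\end{aligned}
```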

Possible ways to reduce this problem in LDA include: iteratively removing top-ranked features whose values are confined to a single point; removing features with many N/As during pre-processing; and filling N/As with random values drawn from a distribution with the feature's mean and standard deviation rather than with a constant. These methods were not tried, since the other classifiers below gave better results.
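The second and third suggestions (not tried in this work) could be combined roughly as below. This is a sketch of a hypothetical helper, `impute_random`; the 50% missing-value threshold `max_missing` is an assumed value, not one taken from this report. Because it injects random noise, results would vary with the seed.

```python
import numpy as np
import pandas as pd


def impute_random(df: pd.DataFrame, max_missing: float = 0.5, seed=None) -> pd.DataFrame:
    """Drop sparse features, then fill the remaining gaps with N(mean, std) draws."""
    # Drop features whose fraction of missing values exceeds max_missing
    df = df.loc[:, df.isna().mean() <= max_missing].copy()
    rng = np.random.default_rng(seed)
    for col in df.columns:
        missing = df[col].isna()
        if missing.any():
            mu, sigma = df[col].mean(), df[col].std()
            sigma = 0.0 if np.isnan(sigma) else sigma  # std undefined for one value
            df.loc[missing, col] = rng.normal(mu, sigma, size=int(missing.sum()))
    return df


demo = pd.DataFrame({"dense": [1.0, np.nan, 3.0, 5.0],
                     "sparse": [np.nan, np.nan, np.nan, 2.0]})
filled = impute_random(demo, seed=0)
print(filled)
```

Here "sparse" (75% missing) is dropped, and the single gap in "dense" is replaced by a draw around its mean of 3 rather than by the constant 3 itself.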

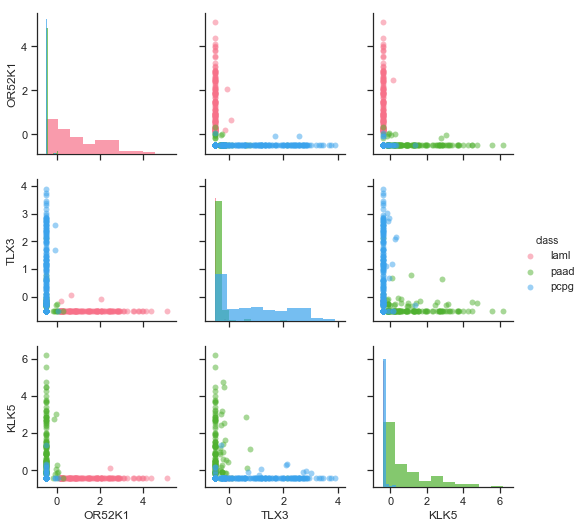

Logistic regression and linear SVM gave more sensible results. Over numerous runs, both classifiers consistently selected features that are strongly expressed in only one of the cancers (Figure ). However, in all cases the three classes overlapped in these features, making reliable classification impossible with a reasonably small number of features. The best features were selected by taking the top two features for each class (six features in total).
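Selecting the top two features per class from a fitted linear model could look like the sketch below. It assumes a scikit-learn classifier whose `coef_` array has one row per class, which holds for `LogisticRegression` and `LinearSVC` with three or more classes. "Highest weight" is read here as the largest positive coefficient, which is an assumption; the helper `top_features_per_class` and the synthetic data, in which feature `f{c}` is raised only in class `c`, are hypothetical.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression


def top_features_per_class(model, feature_names, k=2):
    """Return the k features with the largest positive weight for each class."""
    # coef_ has one row per class when there are three or more classes
    selected = {}
    for cls, weights in zip(model.classes_, model.coef_):
        top = np.argsort(weights)[::-1][:k]
        selected[cls] = [feature_names[i] for i in top]
    return selected


# Synthetic data: feature f{c} is strongly raised only in class c
rng = np.random.default_rng(0)
y = np.repeat([0, 1, 2], 50)
X = rng.normal(size=(150, 6))
X[np.arange(150), y] += 4.0
names = [f"f{i}" for i in range(6)]
model = LogisticRegression(max_iter=1000).fit(X, y)
selected = top_features_per_class(model, names)
print(selected)  # six features in total, two per class
```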

Of all the classifiers, decision trees and random forests found the best features in terms of separability. They picked well-separated features with no overlap, which can be classified with a decision boundary parallel to one of the coordinate axes (Figure ). These features were selected by taking all features with an importance greater than 0.1 (usually the top 2-5 nodes of the tree).
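The importance threshold can be applied to a fitted scikit-learn decision tree or random forest through its `feature_importances_` attribute (impurity-based importances that sum to 1). The helper `important_features` and the toy data, where only `f0` separates the classes, are hypothetical; the 0.1 threshold is the one used above.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier


def important_features(model, feature_names, threshold=0.1):
    """Features with importance above the threshold, most important first."""
    importances = model.feature_importances_  # impurity-based, sums to 1
    order = np.argsort(importances)[::-1]
    return [(feature_names[i], float(importances[i]))
            for i in order if importances[i] > threshold]


# Synthetic data: only f0 separates the three classes (axis-aligned boundaries)
rng = np.random.default_rng(0)
y = np.repeat([0, 1, 2], 50)
X = rng.normal(size=(150, 5))
X[:, 0] += 10.0 * y
names = [f"f{i}" for i in range(5)]
tree = DecisionTreeClassifier(random_state=0).fit(X, y)
result = important_features(tree, names)
print(result)
```

Since two splits on `f0` already give pure leaves, the tree never uses the noise features, which mirrors the well-separated, non-overlapping features described above.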

The approach discussed so far trains an estimator once and inspects the top features. However, the top features change from one training run to the next, depending on the random initial state of the classifier and the random train/test split. This is also because many features have similar properties. To reduce this issue, a new algorithm was written based on Recursive Feature Elimination (RFE). It trains a model, discards the worst 5% of features, and repeats until a given number of features remains (3 in this case). RFE was run 20 times each with logistic regression, decision tree and linear SVM classifiers, reshuffling the train/test data and resetting the classifier's initial state at every iteration. To ensure randomness, a new random seed based on the current time was generated for each run. The number of occurrences of each feature across the iterations was then counted (Table ). This approach was applied to the gene dataset only, because it gave the best classification results and, being the smallest, kept the run time of this heavier algorithm reasonable. Interestingly, this time the classifiers selected features that recurred across runs, as opposed to the different features produced at each training run in the previous approach.
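A minimal sketch of the repeated-RFE vote, using scikit-learn's built-in `RFE` as a stand-in for the custom algorithm described above. One difference to be aware of: when `step` is a float between 0 and 1, scikit-learn removes that fraction of the initial number of features at every iteration, not 5% of the features still remaining. The helper `rfe_vote`, the 33% test split and the synthetic data are assumptions; the estimator must accept a `random_state` parameter, which `LogisticRegression`, `LinearSVC` and `DecisionTreeClassifier` all do.

```python
import time
from collections import Counter

import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split


def rfe_vote(estimator, X, y, feature_names, n_runs=20, n_keep=3, step=0.05):
    """Count how often each feature survives RFE across reshuffled runs."""
    counts = Counter()
    for _ in range(n_runs):
        seed = time.time_ns() % 2**32  # new time-based seed for every run
        X_train, _, y_train, _ = train_test_split(
            X, y, test_size=0.33, random_state=seed)
        model = clone(estimator).set_params(random_state=seed)  # reset initial state
        # A float step drops that fraction of the initial feature count per iteration
        rfe = RFE(model, n_features_to_select=n_keep, step=step).fit(X_train, y_train)
        counts.update(feature_names[i] for i in np.flatnonzero(rfe.support_))
    return counts


X, y = make_classification(n_samples=200, n_features=40, n_informative=3,
                           n_redundant=0, n_classes=3, n_clusters_per_class=1,
                           shuffle=False, random_state=0)
names = [f"f{i}" for i in range(X.shape[1])]
counts = rfe_vote(LogisticRegression(max_iter=1000), X, y, names)
print(counts.most_common(5))
```

Counting survivors over many reshuffled runs, rather than trusting a single fit, is what turns the noisy per-run rankings into a stable shortlist.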

Reduced Dataset Classification

The reduced datasets were then used to train the same classifiers as in Section 3, and their test accuracy was compared with that of the full-dataset classification. Among the feature selectors, logistic regression produced the features that gave the worst classification (Figure ). The features produced by the decision tree and random forest gave comparably good classification. All three datasets gave comparable results.

Compared with the full-dataset classification, the reduced datasets achieved very similar accuracy (lower by less than 1% on average) with just a few features. This small drop is due to a few outliers that overlap with other classes.
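A sketch of the full-versus-reduced comparison, assuming the selected features are given as column indices into `X`. The helper `full_vs_reduced`, the decision-tree model and the synthetic data (where the first three columns are the informative ones) are hypothetical. Both runs share one train/test split so the two accuracies are directly comparable.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


def full_vs_reduced(make_model, X, y, selected, seed=0):
    """Test accuracy with all features and with only the selected columns."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.33, random_state=seed)
    full = make_model().fit(X_train, y_train).score(X_test, y_test)
    reduced = (make_model().fit(X_train[:, selected], y_train)
               .score(X_test[:, selected], y_test))
    return full, reduced


X, y = make_classification(n_samples=300, n_features=100, n_informative=3,
                           n_redundant=0, n_classes=3, n_clusters_per_class=1,
                           class_sep=2.0, shuffle=False, random_state=0)
selected = [0, 1, 2]  # with shuffle=False the informative features come first
full, reduced = full_vs_reduced(lambda: DecisionTreeClassifier(random_state=0),
                                X, y, selected)
print(f"full: {full:.3f}, reduced: {reduced:.3f}")
```

Ideally the selected indices should themselves be chosen on the training portion only, so that the test samples do not influence feature selection.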

With the RFE approach, the datasets reduced with the decision tree reached an average accuracy of over 99%, those reduced with linear SVM 92%, and those reduced with logistic regression 81%.

Comparison with Other Literature

One of the top features (cg05973398), found by a decision tree on the methylation dataset with an importance of 48%, corresponds to the RUNX1 gene. Numerous papers agree that mutations in RUNX1 cause predisposition to LAML. The first feature-selection approach found 25 other genes, but none of them were recognised in other literature as related to cancer.

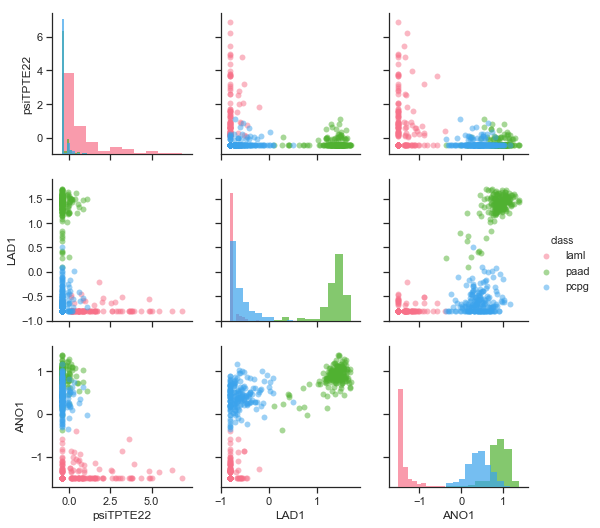

The second approach (RFE) found 8 genes. Of these, TLX3, KLK5, LAD1, ANO1 and PNLIPRP1 are classified as related to cancer by the Human Protein Atlas. According to this source, TLX3 (T-cell leukaemia homeobox 3) is associated with T-cell acute lymphoblastic leukaemia, while LAD1, ANO1 and PNLIPRP1 are related to pancreatic cancer (their strong expression in this cancer can be seen in Figure ).

Conclusion

Two approaches for extracting the best features were proposed. The first produced noisy results: the same features rarely came up again when the algorithm was rerun. These features were good for classifying the three classes, but only one of them was recognised in the literature as related to cancer. The second approach significantly reduced this noise, and the selected features were much more consistent across runs. Besides being good for classification, many of these features were also recognised by external sources as related to cancer, some of them to the cancers studied in this work.

The three datasets gave comparable results, but the gene dataset had the advantage of being smaller and more convenient to work with. For feature selection, LDA produced the worst results. The tree-based algorithms found the best-separated features, which give classification accuracies of over 99% with just two features. Logistic regression and linear SVM selected features that are strongly expressed in just one class but, because the classes overlap in them, are worse for classification.

Although the algorithms found features that distinguish between the different cancers, this does not necessarily mean that these features are related to the cancers themselves. These features probably stand out in one class because the samples were collected from different cell types, so the classifiers may in fact be classifying cell types rather than cancers. As a suggestion for improvement, better results would probably be achieved by running the RFE-based feature selectors on two datasets from the same organ: one containing data from normal cells and the other containing data from cancer cells. This would remove the expression differences caused by different cell types, leaving only the differences due to the cancer.